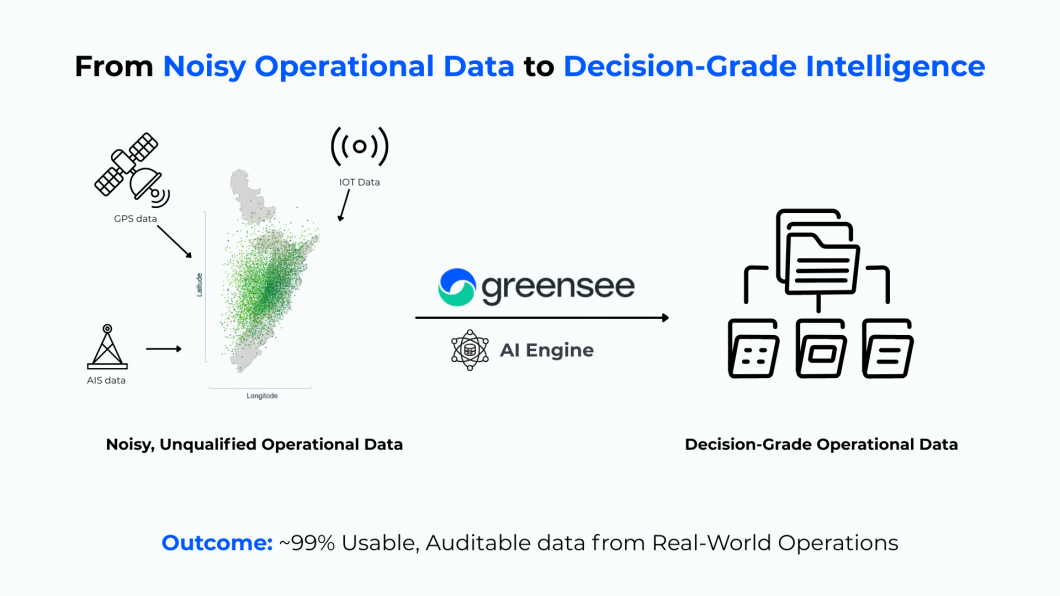

AI-Driven Data Qualification for Industrial and Logistics Operations

Executive Summary

Industrial IoT data is inherently noisy.

This is not a technology failure. It is the natural result of deploying sensors across real operating environments, including ports, vessels, trucks, terminals, and warehouses, where assets are exposed to vibration, weather, handling, power instability, and intermittent connectivity.

Across large-scale deployments, organisations routinely observe:

- 20–30% missing or incomplete data points

- 5–10% corrupted or anomalous values

- Timestamp drift, duplicated records, frozen sensors, and bias accumulation

At the same time, business-critical use cases such as regulatory compliance, emissions reporting, energy optimisation, predictive maintenance, and AI-driven decision-making assume data reliability.

This white paper explains how Greensee consistently transforms raw IoT time-series data into 99.5% usable, decision-grade data, and why scale, domain intelligence, and experience matter more than generic data cleaning tools.

1. The Reality of Field IoT Data

IoT data collected in the field behaves very differently from laboratory or test-bench data.

Common sources of disruption include:

- Connectivity gaps caused by roaming, dead zones, or satellite latency

- Sensor degradation over time due to ageing hardware and calibration drift

- Operational artefacts such as loading shocks, crane handling, and power cycling

- Human intervention, including manual overrides, unplugging, or misconfiguration

These conditions create data patterns that are often misleading rather than obviously wrong:

- Flatlines that appear stable but indicate frozen sensors

- Sudden spikes caused by electromagnetic interference or handling events

- GPS jumps covering kilometres in seconds

- Energy readings that contradict physical constraints

- Temperature values that violate thermodynamic behaviour

Treating these issues as rare anomalies underestimates how frequently they occur at scale.
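As an illustration of the first pattern in the list above, a frozen sensor can be surfaced by looking for runs of unchanged readings. This is a minimal sketch, not Greensee's implementation: the function name `find_flatlines` and the `min_run` and `tolerance` parameters are hypothetical, and sensible values depend on the sensor and its sampling rate.

```python
from typing import List, Sequence, Tuple


def find_flatlines(
    values: Sequence[float],
    min_run: int = 5,        # assumed: samples needed before a run is suspicious
    tolerance: float = 0.0,  # assumed: largest change still treated as "no change"
) -> List[Tuple[int, int]]:
    """Return inclusive (start, end) index pairs of runs in which each reading
    differs from the previous one by no more than `tolerance`."""
    runs: List[Tuple[int, int]] = []
    start = 0
    for i in range(1, len(values) + 1):
        # Close the current run at the end of the series or when the value moves.
        if i == len(values) or abs(values[i] - values[i - 1]) > tolerance:
            if i - start >= min_run:
                runs.append((start, i - 1))
            start = i
    return runs


readings = [4.1, 4.2, 4.2, 4.2, 4.2, 4.2, 4.3, 4.1]
suspect_runs = find_flatlines(readings, min_run=5)
```

A flagged run is only a candidate. Whether it is a frozen sensor or a genuinely stable process still has to be decided from context, which is the point of the sections that follow.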

2. Why Traditional Data Cleaning Fails

Many organisations rely on rule-based or generic approaches to clean IoT data:

- Static thresholds

- Rolling averages

- Off-the-shelf anomaly detection libraries

- Manual post-processing in spreadsheets

These methods fail because they ignore operational context and physical reality.

The same signal can mean very different things depending on circumstances:

- A temperature spike may indicate sensor failure in a warehouse, but be expected during a defrost cycle

- A power drop may signal a malfunction at sea, but be normal during terminal unplugging

- A stationary GPS position may be an error, or a legitimate dwell event

Without contextual intelligence, data cleaning becomes guesswork. Worse, it can silently remove valid data or preserve invalid data, undermining trust in analytics and AI outputs.
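The temperature example above can be made concrete with a small sketch that contrasts a static threshold with a rule that takes operating mode into account. Everything here is an assumption for illustration: the limits are invented values for a frozen-cargo unit, and `defrost_active` stands for a mode flag that is assumed to be available from the asset.

```python
def static_threshold_flag(temp_c: float, limit_c: float = -15.0) -> bool:
    """Generic rule: flag any reading warmer than a fixed limit."""
    return temp_c > limit_c


def contextual_flag(
    temp_c: float,
    defrost_active: bool,
    limit_c: float = -15.0,         # assumed limit in normal operation
    defrost_limit_c: float = 10.0,  # assumed ceiling tolerated while defrosting
) -> bool:
    """Context-aware rule: the acceptable ceiling depends on operating mode."""
    ceiling = defrost_limit_c if defrost_active else limit_c
    return temp_c > ceiling


# The same -5 °C reading is flagged by the static rule in both situations,
# but the contextual rule accepts it during a defrost cycle.
flag_static = static_threshold_flag(-5.0)
flag_defrost = contextual_flag(-5.0, defrost_active=True)
flag_normal = contextual_flag(-5.0, defrost_active=False)
```

The static rule would discard a valid defrost reading. Widening its limit to avoid that would instead let a real excursion through in normal operation.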

3. Data Qualification Before Data Cleaning

At Greensee, data quality starts with data qualification, not deletion.

Every incoming data point is evaluated across four dimensions:

- Physical plausibility: alignment with laws of physics, energy balance, and thermal inertia

- Operational context: asset state, mode of operation, location, and known workflows

- Temporal consistency: rate of change, persistence, seasonality, and historical patterns

- Cross-signal coherence: correlation with related sensors and signals

Only after this qualification process is a data point classified as:

- Valid

- Recoverable

- Correctable

- Non-usable

This approach maximises data recovery while maintaining analytical integrity.
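One way to picture the qualification step is as a record of the four checks feeding a classifier that returns one of the four classes. The paper does not spell out how check results map to classes, so the mapping in `classify` below is purely an illustrative assumption, as are all the names.

```python
from dataclasses import dataclass
from enum import Enum


class Quality(Enum):
    VALID = "valid"
    RECOVERABLE = "recoverable"
    CORRECTABLE = "correctable"
    NON_USABLE = "non-usable"


@dataclass(frozen=True)
class Checks:
    """Outcome of the four qualification dimensions for one data point."""
    physically_plausible: bool
    fits_operational_context: bool
    temporally_consistent: bool
    coherent_with_other_signals: bool


def classify(checks: Checks) -> Quality:
    """Hypothetical mapping from check results to a class (assumption only)."""
    results = (
        checks.physically_plausible,
        checks.fits_operational_context,
        checks.temporally_consistent,
        checks.coherent_with_other_signals,
    )
    failures = results.count(False)
    if failures == 0:
        return Quality.VALID
    if failures >= 2:
        # Assumed: too little agreement left to repair the point safely.
        return Quality.NON_USABLE
    if not checks.temporally_consistent:
        # Assumed: a lone timing issue can be fixed in place, e.g. by re-alignment.
        return Quality.CORRECTABLE
    # Assumed: a lone value issue can be rebuilt from the remaining evidence.
    return Quality.RECOVERABLE


label = classify(Checks(True, True, False, True))
```

The design point is that a failed check leads to a class, not straight to deletion, so downstream steps can decide whether to repair, reconstruct, or exclude the point.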

4. Learning from Ground Truth at Scale

Greensee’s advantage does not come from a single algorithm. It comes from accumulated operational ground truth.

Our models are trained on:

- Billions of time-series data points

- Thousands of documented operational incidents

- Labelled maintenance, inspection, and audit events

- Multi-OEM, multi-sensor, multi-geography datasets

This enables capabilities such as:

- Context-aware outlier detection

- Probabilistic gap filling that respects physical constraints

- Signal reconstruction using correlated variables

- Dynamic confidence scoring at the data-point level

As new data flows in, models continuously improve, reducing false positives and increasing trust over time.
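To give a feel for constrained gap filling with per-point confidence, the sketch below fills short gaps and attaches a confidence score to every sample. It uses plain linear interpolation as a stand-in for the probabilistic models described above, and a maximum per-sample change as a stand-in for a physical constraint such as thermal inertia. The names `fill_short_gaps`, `max_gap`, and `max_step`, and the confidence formula, are assumptions.

```python
from typing import List, Optional, Sequence, Tuple


def fill_short_gaps(
    values: Sequence[Optional[float]],
    max_gap: int = 3,       # assumed: longest gap (in samples) worth filling
    max_step: float = 2.0,  # assumed: largest physically credible change per sample
) -> List[Tuple[Optional[float], float]]:
    """Return (value, confidence) pairs; observed samples get confidence 1.0."""
    out: List[Tuple[Optional[float], float]] = [
        (v, 1.0 if v is not None else 0.0) for v in values
    ]
    n = len(values)
    i = 0
    while i < n:
        if values[i] is not None:
            i += 1
            continue
        # Locate the gap [i, j) of consecutive missing samples.
        j = i
        while j < n and values[j] is None:
            j += 1
        gap = j - i
        # Only fill interior gaps that are short enough.
        if 0 < i and j < n and gap <= max_gap:
            left, right = values[i - 1], values[j]
            step = (right - left) / (gap + 1)
            # Refuse to fill if the implied rate of change is not credible.
            if abs(step) <= max_step:
                for k in range(i, j):
                    offset = k - i + 1
                    # Confidence falls with distance from the nearest real sample.
                    distance = min(offset, j - k)
                    out[k] = (left + offset * step, 1.0 - distance / (max_gap + 1))
        i = j
    return out


filled = fill_short_gaps([10.0, None, None, None, 18.0])
```

Gaps that are too long, sit at the edge of the series, or would imply an implausible jump are left as `None` with confidence 0.0, so consumers can tell reconstructed values from measured ones.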

5. A Purpose-Built Qualification Library

Greensee has developed a proprietary library of data qualification modules designed specifically for industrial and logistics IoT use cases.

These include:

GPS and geofencing

- Drift detection

- Impossible speed filtering

- Terminal-aware correction

Shock and vibration

- Differentiation between handling events and damage

- Frequency-based classification

Temperature

- Defrost cycle recognition

- Thermal inertia modelling

- Separation of ambient and cargo effects

Power and energy

- Load consistency validation

- Plug and unplug inference

- Phase imbalance detection

Humidity

- Sensor saturation correction

- Condensation artefact filtering

Each module embeds engineering knowledge, not just statistical patterns.
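As a sketch of what the simplest of these modules might look like, impossible speed filtering can be approximated by rejecting any GPS fix that would require an implausible speed to reach from the last accepted fix. This is not the Greensee library: the function names, the tuple layout, and the 40 m/s ceiling are assumptions, and the sketch trusts the first fix in the series.

```python
import math
from typing import List, Sequence, Tuple

Fix = Tuple[float, float, float]  # (unix_seconds, latitude_deg, longitude_deg)

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def filter_impossible_speeds(fixes: Sequence[Fix], max_speed_mps: float = 40.0) -> List[Fix]:
    """Keep fixes reachable from the last accepted fix at or below max_speed_mps."""
    kept: List[Fix] = []
    for fix in fixes:
        if not kept:
            kept.append(fix)  # assumption: the first fix is trustworthy
            continue
        t0, lat0, lon0 = kept[-1]
        t1, lat1, lon1 = fix
        dt = t1 - t0
        if dt <= 0:
            continue  # duplicated or out-of-order timestamp
        if haversine_m(lat0, lon0, lat1, lon1) / dt <= max_speed_mps:
            kept.append(fix)
    return kept


track = [
    (0.0, 51.9000, 4.0),
    (10.0, 51.9001, 4.0),  # about 11 m in 10 s: plausible
    (20.0, 52.0000, 4.0),  # about 11 km in 10 s: a jump
    (30.0, 51.9002, 4.0),  # back on the original path
]
clean_track = filter_impossible_speeds(track)
```

Comparing against the last accepted fix, not the previous raw fix, keeps a single jump from also invalidating the good point that follows it. A terminal-aware version would additionally use geofence and asset-state information, which this sketch does not attempt.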

6. Why Data Volume and Experience Matter

High data quality cannot be achieved with limited datasets.

Scale matters because:

- Rare failure modes only appear over large fleets

- Edge cases define system robustness

- Long-tail behaviours dominate real operations

Greensee processes data across:

- Multiple years of operation

- Diverse climates and infrastructures

- Numerous sensor models, firmware versions, and OEMs

This exposure improves priors, accelerates model convergence, and significantly reduces false positives.

Data quality is not a feature. It is a learned capability.

7. What 99.5% Data Quality Enables

Near-perfect data quality unlocks tangible business outcomes:

- Reliable AI and predictive analytics

- Defensible GHG and energy calculations

- Audit-ready regulatory reporting

- Accurate KPIs and benchmarking

- Confidence across operations, finance, and compliance teams

Most importantly, it allows organisations to act on data rather than debate its validity.

Conclusion

IoT data will always be messy.

Ignoring that reality leads to fragile analytics and failed AI initiatives. Addressing it requires more than generic tools. It requires operational intelligence, physics-aware models, and experience at scale.

Greensee’s decade-long expertise in time-series processing, combined with AI and real-world industrial data, enables a different outcome: 99.5% data quality, in production, in the field, at scale.

Because AI is only as good as the data you trust.

About Greensee

Greensee designs and operates AI-driven data platforms for logistics, maritime, and industrial ecosystems, specialising in high-volume time-series processing, energy optimisation, and decarbonisation analytics.